The Road to Tokenization of Commercial Real Estate

How early API-first thinking laid the groundwork for tokenized, programmable assets

From Fragmented Assets to Programmable Markets

At Platform Summit 2019, Ivan Nokhrin presented a talk titled “The Road to Tokenization of Commercial Real Estate”, outlining a vision that, at the time, was still considered largely conceptual. The core argument was simple but structural: tokenization of commercial real estate (CRE) would not be achieved through blockchain technology alone, but through APIs, data standardization, and interoperability.

This article revisits that perspective in light of ideas also explored in the Nordic APIs article “How PropTech APIs Will Disrupt Real Estate”, and reflects on how many of those early assumptions have since become industry realities.

Tokenization Starts Long Before Tokens

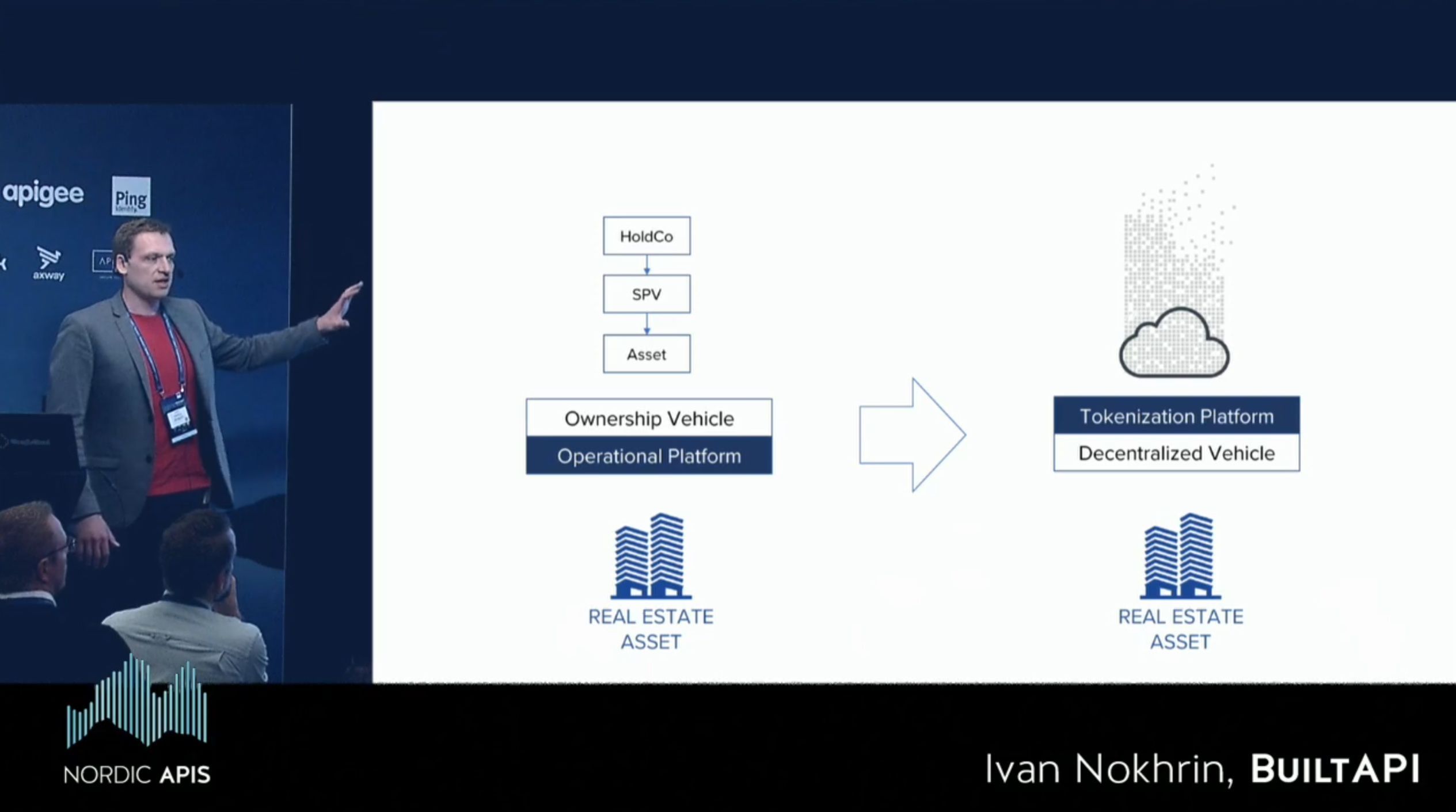

In 2019, much of the discussion around tokenization focused on legal wrappers, distributed ledgers, and fractional ownership models. Nokhrin’s Platform Summit presentation challenged this framing.

The argument was that real estate cannot be tokenized meaningfully unless the underlying asset data is already digitized, structured, and machine-readable. Ownership units, cash flows, leases, valuations, risks, and operational metrics must exist in standardized digital form before they can be represented as tokens.
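Here is a minimal Python sketch of what "standardized digital form" could look like: a machine-readable asset record. The field names (`asset_id`, `ownership_units`, `leases`, `operational_metrics`) and the sample values are hypothetical and do not come from a published CRE data standard. The sketch only shows that once leases and metrics are structured, derived figures such as the rent roll can be computed and serialized instead of being re-keyed by hand.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import Dict, List


@dataclass
class Lease:
    tenant: str
    annual_rent: float  # contracted rent per year, in the asset's reporting currency
    expiry: str  # ISO 8601 date string, e.g. "2027-12-31"


@dataclass
class AssetRecord:
    # Hypothetical record layout, not an industry schema
    asset_id: str
    ownership_units: int  # number of units the asset is divided into
    valuation: float
    leases: List[Lease] = field(default_factory=list)
    operational_metrics: Dict[str, float] = field(default_factory=dict)

    def annual_rent_roll(self) -> float:
        # Contracted cash flow is derived from the lease data, not typed in separately
        return sum(lease.annual_rent for lease in self.leases)

    def to_json(self) -> str:
        # asdict() recurses into nested dataclasses, so leases serialize as well
        return json.dumps(asdict(self), sort_keys=True)


asset = AssetRecord(
    asset_id="office-001",
    ownership_units=10_000,
    valuation=25_000_000.0,
    leases=[
        Lease("Tenant A", 900_000.0, "2027-12-31"),
        Lease("Tenant B", 600_000.0, "2029-06-30"),
    ],
    operational_metrics={"occupancy_rate": 0.92},
)
print(asset.annual_rent_roll())  # 1500000.0
print(asset.to_json())
```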

APIs were positioned as the prerequisite layer, not an implementation detail.

APIs as the Foundation of Digital Assets

As described in the Nordic APIs analysis, PropTech APIs enable real estate data to move across systems, organizations, and value chains. Nokhrin extended this logic by arguing that APIs effectively define the “interface” of a real estate asset.

If an asset’s financials, performance metrics, and legal attributes can be accessed programmatically, the asset itself becomes programmable. Tokenization then becomes a representation of an already-digital object, rather than an attempt to digitize something that remains fundamentally analog.
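One way to read "APIs define the interface of an asset" is as a typed contract that any downstream consumer can code against. The sketch below assumes a hypothetical `AssetInterface` whose three methods mirror the financials, performance metrics, and legal attributes mentioned above. `InMemoryAsset` is a stand-in with fixed sample data. In practice, an implementation would call remote endpoints instead.

```python
from typing import Dict, Protocol


class AssetInterface(Protocol):
    """Hypothetical programmatic contract for a real estate asset."""

    def financials(self) -> Dict[str, float]: ...

    def performance(self) -> Dict[str, float]: ...

    def legal_attributes(self) -> Dict[str, str]: ...


class InMemoryAsset:
    """Stand-in provider with fixed sample data; a real one would call an API."""

    def financials(self) -> Dict[str, float]:
        return {"net_operating_income": 1_200_000.0, "valuation": 24_000_000.0}

    def performance(self) -> Dict[str, float]:
        return {"occupancy_rate": 0.94}

    def legal_attributes(self) -> Dict[str, str]:
        return {"title_status": "clear"}


def noi_yield(asset: AssetInterface) -> float:
    # Simple NOI-to-valuation ratio; the caller never sees how the data is stored
    figures = asset.financials()
    return figures["net_operating_income"] / figures["valuation"]


print(noi_yield(InMemoryAsset()))  # 0.05
```

`noi_yield` depends only on the interface. The same function would therefore work unchanged against any provider that implements those three methods, and that property is what makes the asset "programmable" in this framing.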

In this sense, APIs, not blockchains alone, were presented as the true enabler of liquidity.

From Static Ownership to Dynamic Participation

A key theme of the 2019 talk was the shift from static ownership models toward dynamic participation. Traditional CRE ownership is slow to change, difficult to divide, and costly to transfer. Tokenized structures promise greater flexibility, but only if the underlying data supports continuous calculation and validation.

APIs allow real-time access to performance indicators, distributions, and risk metrics. This makes it possible to imagine ownership units that update dynamically, respond to operational realities, and integrate with financial systems downstream.
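To illustrate ownership units that track operating results, the sketch below splits one period's distributable cash across unit holders in proportion to their holdings. The function name `distribute`, the holder identifiers, and the round-down-to-the-cent policy are assumptions made for this example. A real tokenized structure would take its allocation rules from the vehicle's legal documentation, and the period's cash figure would come from the asset's data feed.

```python
from decimal import ROUND_DOWN, Decimal
from typing import Dict


def distribute(period_cash: Decimal, holdings: Dict[str, int], total_units: int) -> Dict[str, Decimal]:
    """Split one period's distributable cash pro rata across unit holders (hypothetical model).

    Each payout is rounded down to the cent, so any residual stays undistributed
    rather than being over-paid.
    """
    if sum(holdings.values()) > total_units:
        raise ValueError("holdings exceed the total number of units")
    per_unit = period_cash / total_units
    return {
        holder: (per_unit * units).quantize(Decimal("0.01"), rounding=ROUND_DOWN)
        for holder, units in holdings.items()
    }


# Assumed: distributable cash is refreshed from the asset's API each period
payouts = distribute(Decimal("125000.00"), {"holder_a": 250, "holder_b": 1_000}, total_units=10_000)
print(payouts)
```

The example uses `Decimal` rather than `float` so that payouts are exact at the cent level, which matters when the same calculation is repeated every period.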

Without APIs, tokenization risks becoming a cosmetic layer on top of opaque assets.

Interoperability Over Isolation

Another central point, also made in “How PropTech APIs Will Disrupt Real Estate”, is that isolated platforms cannot grow into ecosystems. In 2019, Nokhrin emphasized that tokenized real estate would fail if each platform defined its own proprietary data model.

Instead, interoperability across valuation systems, asset management tools, banking infrastructure, and regulatory reporting was identified as critical. APIs, combined with shared schemas and standards, were presented as the only viable path toward an open market for digital real estate assets.
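Interoperability through shared schemas can be pictured as a translation layer. Each platform keeps its internal field names, but every record it exchanges is converted into a common, validated shape. The schema, the two platform mappings, and the `to_shared` helper below are all hypothetical and much simpler than real industry standards. They only show why two proprietary payloads become comparable once they are mapped onto the same model.

```python
from typing import Any, Dict

# Hypothetical shared schema: field name -> required Python type
SHARED_SCHEMA: Dict[str, type] = {
    "asset_id": str,
    "valuation": float,
    "currency": str,
    "valuation_date": str,
}

# Hypothetical proprietary field names used by two platforms, mapped to the shared names
PLATFORM_MAPPINGS: Dict[str, Dict[str, str]] = {
    "platform_a": {"id": "asset_id", "value": "valuation", "ccy": "currency", "as_of": "valuation_date"},
    "platform_b": {"assetRef": "asset_id", "marketValue": "valuation",
                   "currencyCode": "currency", "date": "valuation_date"},
}


def to_shared(platform: str, payload: Dict[str, Any]) -> Dict[str, Any]:
    """Translate a platform-specific payload into the shared schema and validate it."""
    mapping = PLATFORM_MAPPINGS[platform]
    record = {shared: payload[src] for src, shared in mapping.items() if src in payload}
    missing = SHARED_SCHEMA.keys() - record.keys()
    if missing:
        raise ValueError(f"missing fields: {sorted(missing)}")
    for name, expected in SHARED_SCHEMA.items():
        if not isinstance(record[name], expected):
            raise TypeError(f"{name} must be of type {expected.__name__}")
    return record


a = to_shared("platform_a", {"id": "office-001", "value": 25_000_000.0, "ccy": "EUR", "as_of": "2019-06-30"})
b = to_shared("platform_b", {"assetRef": "office-001", "marketValue": 25_000_000.0,
                             "currencyCode": "EUR", "date": "2019-06-30"})
print(a == b)  # True
```

The strict type check is a deliberate choice. Rejecting malformed records at the boundary is cheaper than reconciling them later across valuation, banking, and reporting systems.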

This thinking anticipated later debates around data sovereignty, vendor lock-in, and composable architectures.

A Long-Term View That Is Now Materializing

Looking back, the “Road to Tokenization” presented at Platform Summit 2019 was less a prediction about technology and more a roadmap for infrastructure readiness. Many of the challenges identified then (fragmented data, lack of standardization, brittle integrations) remain central today.

What has changed is the industry’s awareness that tokenization, automation, and AI are downstream outcomes. The upstream work is data engineering, governance, and interoperability.

Key Takeaways

Tokenization is not a single leap but a gradual transformation of real estate into a digitally native asset class.

APIs are the mechanism through which that transformation happens. They turn buildings into datasets, datasets into services, and services into programmable economic units.

The road to tokenization runs through infrastructure, not hype.

And that road is still being built.